Jiayu (Jerry) Wang

I work in Technology Strategic Planning at Huawei. At present, I am engaged in AI product and technology planning, as well as research into new directions for consumer electronics.

I finished my PhD at Tsinghua University, advised by Prof. Yu Zhu and Prof. Chuxiong Hu.

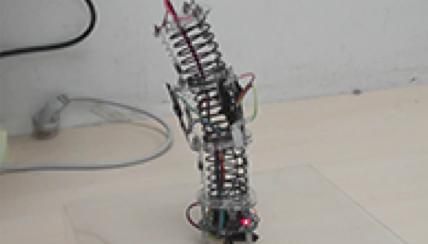

My interests lie at the intersection of AGI and robotics. I was a co-founder of KEYI Technology, which created the first commercial modular robotic kit in the world for entertainment and education.